In recent years, deep learning has captured the attention of scholars, researchers and media.

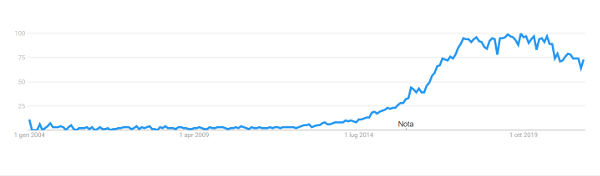

The Google search trend for the term “deep learning” over the last 17 years tells the story.

Deep learning search trend

There is a reason for this rise in interest, but before we get to it, let’s explain what deep learning actually is.

What is deep learning

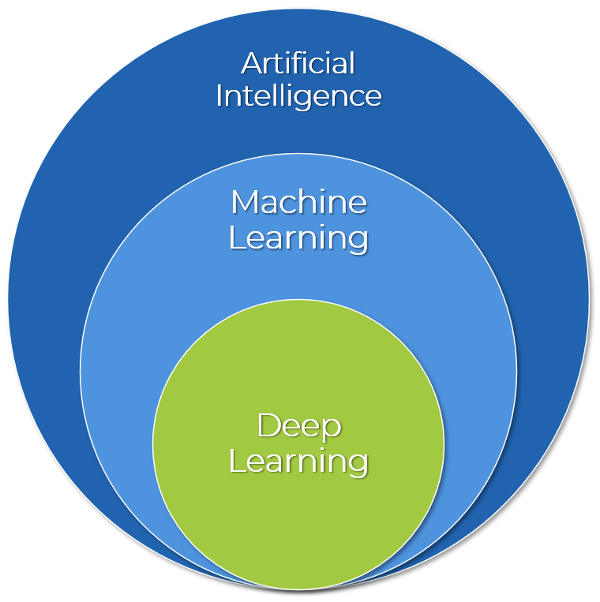

The terms deep learning, machine learning and artificial intelligence are often misused, almost as if they were interchangeable.

In reality, they are distinct concepts, although closely related to one another.

Deep learning is one of the methods of machine learning, which in turn is one of the ways to build what is called Artificial Intelligence.

Artificial Intelligence can be defined as any technique that allows computers to mimic human intelligence, using logic, if-then rules, decision trees and machine learning.

Thus, Artificial Intelligence is the ultimate goal, and machine learning is the road to that goal. This road relies on the ability of machines to acquire data (lots of it) and learn from it automatically, by training models that modify and adapt their algorithms according to the available information.

Machine learning includes abstract statistical techniques that allow machines to improve at tasks through experience. There are several approaches to implementing machine learning: one of them is deep learning.

Deep learning is thus a subset of machine learning, made up of algorithms that allow software to train itself to perform tasks (e.g., speech or image recognition) by exposing multilayered neural networks to a large amount of data.

Difference between Artificial Intelligence, Machine Learning and Deep Learning

As an example of another approach, we can cite Bayesian networks, which NeXT has used in the past for an AI algorithm dedicated to resolving quality problems during the manufacturing process.

Deep learning relies on architectures and mathematical models known as multilayer neural networks. These networks stack many levels (or layers) of artificial neurons, from a few tens up to thousands in the deepest models: that’s why we use the term “deep”. The goal of this system of neurons is to try to replicate the functioning of the human brain.
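To make the idea of stacked layers concrete, here is a minimal sketch of data flowing through a small multilayer network. Everything in it is an assumption made for illustration: the layer sizes, the random weights and the sigmoid activation are not taken from any specific model, and nothing is being trained here.

```python
import math
import random

def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def make_layer(n_inputs, n_neurons, rng):
    """One layer: each neuron has one weight per input, plus a bias."""
    return [
        {"weights": [rng.uniform(-1, 1) for _ in range(n_inputs)],
         "bias": rng.uniform(-1, 1)}
        for _ in range(n_neurons)
    ]

def forward(network, inputs):
    """Pass the inputs through every layer in order."""
    activations = inputs
    for layer in network:
        activations = [
            sigmoid(sum(w * a for w, a in zip(neuron["weights"], activations))
                    + neuron["bias"])
            for neuron in layer
        ]
    return activations

rng = random.Random(0)  # fixed seed so the run is repeatable

# Hypothetical sizes: 4 inputs, three hidden layers of 8 neurons, 2 outputs.
layer_sizes = [4, 8, 8, 8, 2]
network = [make_layer(n_in, n_out, rng)
           for n_in, n_out in zip(layer_sizes, layer_sizes[1:])]

outputs = forward(network, [0.5, -0.2, 0.1, 0.9])
depth = len(network)  # number of layers the data passes through
print(depth, outputs)
```

Making the network "deeper" in this sketch just means adding more entries to `layer_sizes`; real deep learning models differ mainly in how those weights are learned from data rather than drawn at random.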

Deep learning: the enabling technologies

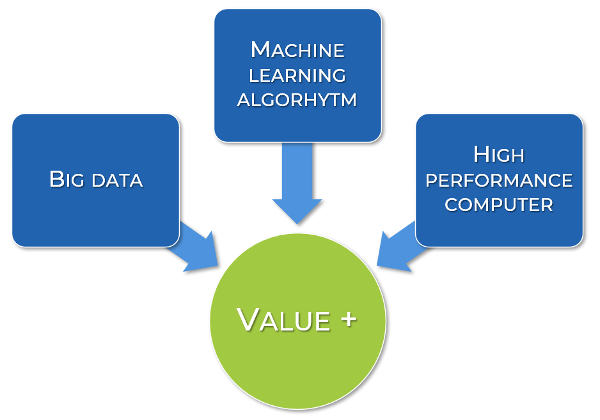

If deep learning were a dish, we could try to provide the recipe to make it at its best.

Let’s do just that, and in the process understand why deep learning is exploding right now, both in general interest, as the image at the beginning of the article shows, and in the labor market, as shown by the recent multiplication of dedicated university courses (in engineering, but also in economic, social and humanistic subjects).

First ingredient: Big data

For several years, starting with what we all call Industry 4.0, i.e., the fourth industrial revolution, we have been hearing about Big data. We could say that it is effectively the data revolution. Data is of central importance today, thanks in part to another enabler, the Internet of Things, which has been instrumental in creating a connected and digital ecosystem, both in our everyday lives and in the industrial context of factories.

It’s getting easier and easier to find data and make it flow: the amount of data is crucial, indeed necessary, to successfully exploit a deep learning model.

So the first step is to have an effective and efficient data acquisition system.

Second ingredient: machine learning algorithms

Machine learning algorithms are used to train models on the large mass of data discussed in the previous section.

The main difference between deep learning and a traditional algorithm is that the behavior of the latter is defined by the programmer, who also supplies the (quantitatively limited) data to be used. In a deep learning model, by contrast, the algorithm learns from large quantities of data what behavior to follow.
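The contrast between programmed and learned behavior can be sketched in a few lines. The example below is deliberately tiny and is not deep learning: it swaps in a one-parameter threshold search as a stand-in for training, and the temperature readings and labels are invented for illustration.

```python
def rule_based(temperature):
    """Traditional approach: the programmer fixes the threshold by hand."""
    return temperature > 30.0

def learn_threshold(samples):
    """Learned approach: pick the threshold that misclassifies the fewest
    labeled samples. `samples` is a list of (temperature, label) pairs."""
    best_threshold, best_errors = None, len(samples) + 1
    for threshold in sorted(t for t, _ in samples):
        errors = sum((t > threshold) != label for t, label in samples)
        if errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold

# Hypothetical labeled data: (temperature, did a fault occur?)
samples = [(18.0, False), (22.5, False), (24.0, False),
           (26.0, True), (29.0, True), (35.0, True)]

threshold = learn_threshold(samples)

def learned(temperature):
    """Same decision as rule_based, but the threshold came from the data."""
    return temperature > threshold

print(threshold, rule_based(26.0), learned(26.0))
```

With this data the hand-written rule misses the fault at 26.0, while the threshold derived from the examples catches it. A deep learning model follows the same principle, only with millions of adjustable parameters instead of one.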

These algorithms can be written in traditional programming languages such as C++, Python, Java or JavaScript. The theory and the algorithms had already existed for some thirty years, but deep learning only took off after 2012, thanks to small but crucial improvements in the algorithms that made multilayer neural networks practical (previously, neural networks were still quite shallow, with only one or two layers of representation).

Further improvements between 2014 and 2016 now make it possible to train models that are thousands of layers deep.

For this to happen, however, the third ingredient is required.

Third ingredient: computing power

The success of a good pizza depends on the temperature at which it can be cooked. For this reason, it would be difficult at home to make a pizza like in a pizzeria: the electric oven reaches a maximum of 250 °C, while the wood-fired oven reaches up to 400 °C.

In the same way, deep learning models need a lot of computing power and memory (storage).

In the past, this was a limiting factor for the development of Artificial Intelligence. Today the power of computers allows the use of deep learning models, so much so that we use artificial intelligence on a daily basis, perhaps not always consciously.

Let’s take a concrete example. In the field of natural language processing we have made great strides, but the algorithms are more or less the same: what has advanced enormously is the hardware, along with the ability to store data in the cloud.

The crucial factor has been the ability to use graphics processors (GPUs) in parallel with the CPU. This made computationally intensive operations feasible.

The most ambitious results may come when even more powerful computers, such as so-called quantum computers, are built.

To date, the capacity of a deep learning neural network is limited to a very specific goal: if it has been trained to recognize human faces, it cannot be used to recognize the faces of another species.

Basically, each artificial neuron responds to its input data (arriving through its synapses) with a “0” or a “1”. Depending on this value, the synapse either passes a signal on to the neurons of the next layer or withholds it. Those neurons in turn process the data they have received and transfer their output to the cells of the layer after that.

Research is moving toward mimicking the functioning of a human neuron as closely as possible. A human neuron is more complex than the neuron of a deep learning network: it is not a single entity, but a complex system of branches with multiple sub-regions. The consequences are very interesting, because the neuron itself could then be treated as a sub-network, with all the implications that this entails.
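The 0-or-1 neuron described above can be sketched as a simple threshold unit. The weights and thresholds below are hand-picked for illustration rather than learned, and networks used in practice generally rely on smoother activation functions than a hard 0/1 step, so treat this as a simplified picture.

```python
def step_neuron(inputs, weights, threshold):
    """Fire (1) if the weighted sum of the inputs reaches the threshold,
    otherwise stay silent (0)."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return 1 if weighted_sum >= threshold else 0

def layer_output(inputs, layer):
    """Run every neuron of a layer on the same inputs."""
    return [step_neuron(inputs, weights, threshold)
            for weights, threshold in layer]

# Hypothetical two-layer network; each neuron is (weights, threshold).
hidden_layer = [
    ([1.0, 1.0], 1.0),   # fires if at least one input is on
    ([1.0, 1.0], 2.0),   # fires only if both inputs are on
]
output_layer = [
    ([1.0, -1.0], 1.0),  # fires if the first hidden neuron is on and the second is off
]

def network(inputs):
    """Feed the inputs through the hidden layer, then the output layer."""
    hidden = layer_output(inputs, hidden_layer)
    return layer_output(hidden, output_layer)[0]

for pair in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(pair, network(pair))
```

The output neuron fires only when exactly one of the two inputs is on, a behavior that no single threshold neuron can produce by itself. It is the passing of signals from one layer to the next that makes it possible.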

The funnel of value

Artificial Intelligence: its applications in everyday life

We may not be aware of it, but we use artificial intelligence every day.

When we let the navigator guide us in our travels, when we do a Google search, when we shop online or interact with a smart voice assistant, we are using artificial intelligence.

Among the areas where deep learning is being used today, we can mention for example:

- Natural language processing (NLP): the computer processing of natural language, as distinct from formal language, of which computer languages are a part. Interest in this topic has grown exponentially in recent years, driven by the explosion of mobile devices. The difficulty of working with natural language is that, compared to formal language, it is full of implied meanings and ambiguities, which makes it very hard to process.

- Computer vision: this field includes the emerging area of autonomous driving, where decisions and the right actions to take have to be worked out in real time.

- Robotics: deep learning is enabling the creation of increasingly autonomous robots equipped with NLP and computer vision systems for image recognition, tactile and olfactory sensory processing.

- Recruiting: today, artificial intelligence is being used to select the best candidate to hire. It can quickly choose from hundreds of CVs, based on multiple parameters deemed important.

- Purchase suggestions: Artificial Intelligence can get to know each Internet user in increasing detail, coming to understand and even predict their purchasing tastes and desires.

- Medical diagnostics: deep learning greatly enhances what every single doctor already does: making decisions based on their own knowledge and experience. It can be applied both in medical diagnostics and in quality control in pharmaceutical manufacturing.

The use of deep learning in the industrial world

In the industrial world, Big data and the Internet of Things have been fairly familiar concepts for several years. These two topics have always been listed among the enabling technologies for Industry 4.0.

The digitization process that many companies in this sector have been undertaking for some time is creating an ideal ecosystem ready for the exploitation of deep learning models.

Artificial Intelligence is highly synergistic with the Internet of Things, so much so that it is fair to think of the two as the brain (AI) and the body (IoT).

In the “Industry 4.0” era, the concept of Internet of Things has become an integral part of any production process. We are surrounded by “smart” devices that can communicate with each other, in the plant and between plants, in a hyper-connected environment: PLCs, sensors, servers, wearable devices.

Data travels fast, everywhere.

Deep learning adds the most value in two uses: models for predictive maintenance, and models that greatly improve the efficiency of the production process, guiding the decision-making of managers.

Let’s go into detail.

- Predictive maintenance: maintenance is a very hot topic. Its progressive digitalization allows the use of increasingly advanced tools that aim to manage every type of intervention and to completely eliminate the reactive ones (i.e., those caused by “sudden” failures).

How is this possible? By using deep learning models within advanced software systems (e.g., a Machine Ledger). These models are capable of monitoring thousands of variables and of automatically scheduling a maintenance event at the precise instant that an “out of control” variable value emerges.

The consequence of using artificial intelligence for maintenance is that the components of a machine are exploited as much as possible, but are replaced before they cause sudden downtime. We therefore exploit the entire life cycle of each component, while also limiting the amount of waste produced each year. The result is a positive environmental impact.

In this way, the concepts of preventive or proactive maintenance are overcome in favor of predictive maintenance.

- Improving production efficiency: in a digitized ecosystem, where data flows from field devices to software systems that must structure and organize those data, the ability to implement deep learning models greatly improves on the effectiveness of the simple mathematical models that would otherwise be used.
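The “out of control” trigger mentioned under predictive maintenance can be illustrated with a very small sketch. Note the assumptions: it swaps in a classic statistical control rule (mean plus or minus three standard deviations) in place of a deep learning model, it watches a single variable instead of thousands, and the sensor readings are invented.

```python
from statistics import mean, stdev

def control_limits(baseline, k=3.0):
    """Lower and upper limits: baseline mean +/- k standard deviations."""
    center = mean(baseline)
    spread = stdev(baseline)
    return center - k * spread, center + k * spread

def first_out_of_control(readings, limits):
    """Index of the first reading outside the limits, or None if all are fine."""
    low, high = limits
    for index, value in enumerate(readings):
        if not (low <= value <= high):
            return index
    return None

# Hypothetical readings of one variable while the machine was healthy.
baseline = [50.1, 49.8, 50.3, 50.0, 49.9, 50.2, 49.7, 50.0]
limits = control_limits(baseline)

# Hypothetical live readings: the variable starts to drift upward.
live = [50.1, 50.4, 50.2, 51.9, 52.5]
trigger_index = first_out_of_control(live, limits)

if trigger_index is not None:
    print(f"Schedule maintenance: reading {live[trigger_index]} "
          f"at position {trigger_index} is out of control")
```

A learned model would replace the fixed limits with a prediction of how the variable should behave given everything else it observes, but the scheduling logic stays the same: act as soon as a value leaves the expected range, before the component actually fails.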

